
For financial institutions, AI is no longer a side project—it has become an integral part of daily operations. But the real differentiator isn’t just having AI; it’s having AI that understands context. When systems can’t connect the dots—between a customer’s history, market shifts, and real-time activity—they miss opportunities, create risks, and fail to deliver the returns leaders expect.

The organizations pulling ahead are those using AI with built-in context: systems that can read situations as a human would, adapt when things change, and make sense of complexity rather than being overwhelmed by it.

This blog explores why context-aware AI is now critical for enterprise success and how it’s helping banks deliver better customer experiences, tighter fraud protection, stronger compliance, sharper risk assessment, and leaner, faster operations.

The Contextual Gap: Where AI Falls Short

Picture a talented junior analyst at a bank. They’re sharp with numbers, fast with reports, and can follow instructions perfectly. But there’s a catch: every time you give them a task, they start from scratch. They forget the meeting yesterday, they don’t recall the client’s history, and they can’t connect today’s market news to tomorrow’s lending decision. Would you trust them to handle your most critical calls?

This is the “contextual gap” in AI. It’s the blind spot where machines excel at isolated tasks but fail to see the story behind the data. They can spot a sudden spike in spending but can’t tell if it’s fraud or a holiday trip. They can approve a loan based on a score but can’t sense a shift in the customer’s business environment. They can answer a query but can’t remember the last conversation that gave it meaning.

The contextual gap shows up in four big ways:


- Data Without Meaning – Traditional AI knows the facts but not the reasons. A flagged transaction is just an anomaly, not a reflection of travel plans, life events, or market trends.

- No Memory – Each interaction is an island. Systems don’t remember past interactions, so customers repeat themselves and opportunities for richer insights are lost.

- Silos Everywhere – Credit scoring models, fraud engines, chatbots, and risk tools often live in their own worlds, unaware of each other. Decisions miss the bigger enterprise picture.

- Frozen in Time – Rule-based models struggle with change. They weren’t built to handle new regulations, new fraud tactics, or sudden market shifts without costly rework.

Bridging this gap means giving AI something more human: awareness. A banker doesn’t just look at numbers; they remember a client’s story, scan the market horizon, and adjust advice when life changes. Context-aware AI does the same—combining memory, integration, and adaptability to make decisions that feel informed and intuitive.

For banks and financial enterprises, closing this gap isn’t just about efficiency; it’s about trust, speed, and staying competitive. Without context, AI is a tool. With context, it becomes a partner.

What Does “Context-Aware AI” Mean?

Context-aware AI is about seeing the bigger picture. It’s not just about processing data; it’s about understanding the circumstances around it.

Think of it as an AI that doesn’t just read words but also understands tone, intent, and history. It remembers previous interactions, factors in cultural or market nuances, and pulls relevant information from across the organization—similar to how an experienced banker recalls a client’s story before offering advice.

While early AI tools handled isolated questions, context-aware systems go deeper, grasping both what is being said and why. For enterprises, this represents a shift from narrow automation to intelligent, situational decision-making that drives real business value.

Traditional AI vs. Context-Aware AI in Banking

Early AI systems gave banks tools like chatbots and rule-based fraud detectors, which worked well for simple tasks but had a critical flaw: they lacked memory and adaptability. Each transaction or customer query was handled as a separate event, with little understanding of the bigger picture. A chatbot could answer a balance inquiry, but if the customer asked about a previous loan discussion, it would fail to connect the dots.

Modern context-aware AI changes this. It learns and adapts over time, maintaining continuity across interactions and building a profile for each customer. When a client chats with the bank’s virtual assistant today, it “remembers” last week’s mortgage question and can follow up intelligently. It even detects sentiment and tone, delivering a more natural, human-like experience.

The same applies to fraud detection. A rule-based system might block a large overseas transaction simply because it looks unusual. But a context-aware system considers the customer’s travel patterns, recent spending behavior, and account activity before deciding whether it’s suspicious. This reduces false alarms and catches complex fraud that static systems would miss.
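
To make the contrast concrete, here is a minimal Python sketch of the two approaches. Everything in it is an assumption for illustration: the `Transaction` and `CustomerContext` types, the fixed 1,000 threshold, and the risk weights are hypothetical, and a real system would typically learn such weights from data rather than hard-code them.

```python
from dataclasses import dataclass, field


@dataclass
class Transaction:
    amount: float
    country: str


@dataclass
class CustomerContext:
    home_country: str
    avg_amount: float  # this customer's typical transaction size
    travel_notice: set[str] = field(default_factory=set)     # countries the customer said they'd visit
    recent_countries: set[str] = field(default_factory=set)  # countries seen in recent activity


LARGE_AMOUNT = 1_000.0  # hypothetical global threshold used by the static rule


def rule_based_flag(tx: Transaction, home_country: str) -> bool:
    """Static rule: any large overseas transaction is suspicious."""
    return tx.amount >= LARGE_AMOUNT and tx.country != home_country


def context_aware_flag(tx: Transaction, ctx: CustomerContext) -> bool:
    """Judge the same transaction against this customer's own patterns."""
    risk = 0.0
    if tx.country != ctx.home_country:
        # A travel notice or recent activity in that country lowers the risk.
        if tx.country in ctx.travel_notice:
            risk += 0.1
        elif tx.country in ctx.recent_countries:
            risk += 0.3
        else:
            risk += 0.6
    # Compare with the customer's own baseline instead of a global threshold.
    if tx.amount > 5 * ctx.avg_amount:
        risk += 0.4
    return risk >= 0.7  # hypothetical decision threshold


if __name__ == "__main__":
    traveler = CustomerContext("US", avg_amount=400.0, travel_notice={"JP"})
    trip = Transaction(1_500.0, "JP")
    print(rule_based_flag(trip, "US"), context_aware_flag(trip, traveler))  # True False

    quiet = CustomerContext("US", avg_amount=50.0)
    probe = Transaction(900.0, "BR")
    print(rule_based_flag(probe, "US"), context_aware_flag(probe, quiet))  # False True
```

The first case is a false alarm the static rule raises and the context-aware check avoids; the second is a smaller transaction, 18 times this customer's average, that slips under the static rule but is caught once the customer's history is considered.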

In short, the difference is like comparing a camera that only takes still shots to one that records and interprets a full video. Context-aware AI adds depth, memory, and adaptability. Here’s how it stacks up across key areas:


Across each area, context-aware AI moves banks from isolated actions to intelligent, holistic decision-making. The result? Better customer experiences, smarter risk control, and a level of efficiency traditional systems simply can’t match.

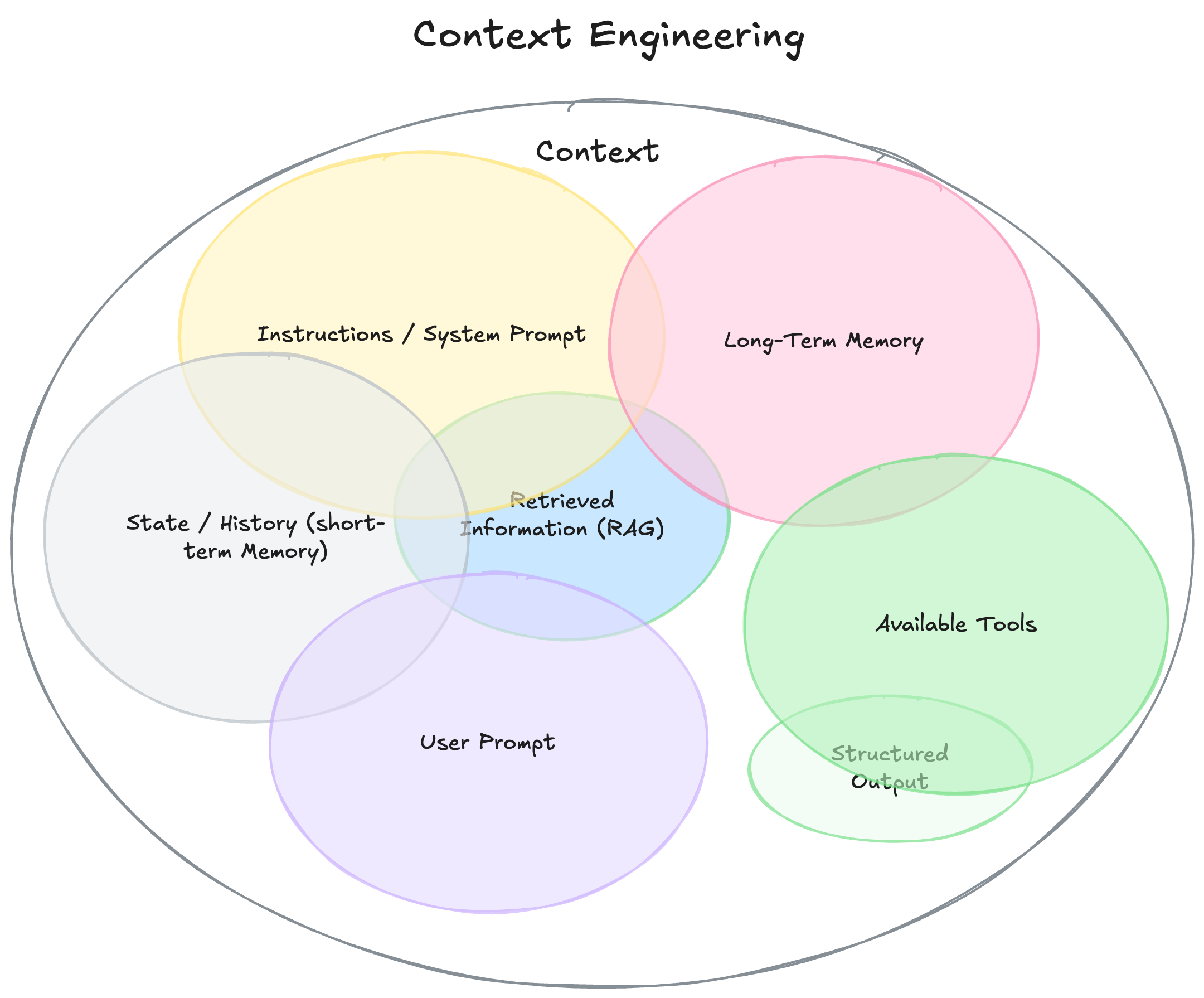

Core Components of Context-Aware AI Systems


Modern context-aware AI systems rely on several foundational components that provide memory, knowledge, and multi-faceted understanding:

- Contextual Memory

Allows AI to remember and reuse past information, rather than treating each interaction as new. This includes short-term conversation history and long-term knowledge stores. In banking, it ensures a chatbot doesn’t keep asking the same questions and provides continuity across sessions.

- Knowledge Graphs

Structured networks of entities (customers, accounts, transactions) and their relationships. They break down data silos and provide a “single source of truth.” In finance, they are critical for fraud detection, compliance, and risk analysis because they reveal connections that aren’t obvious in isolated data.

- Vector Databases & Embeddings

Store and retrieve unstructured information (documents, emails, transcripts) as vectors for semantic search. This powers Retrieval-Augmented Generation (RAG), where models fetch the most relevant pieces of knowledge before answering. For banks, it means accurate, up-to-date responses instead of static or outdated information.

- Multi-Modal Integration

Combines different data types—text, numbers, images, audio—into one context. This lets AI analyze a customer call transcript alongside account data, or review scanned ID documents with transaction records. It mirrors how human analysts use multiple data sources to form judgments.
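
The first of these components, contextual memory, can be sketched in a few lines. This is a minimal illustration assuming a hypothetical `ContextualMemory` class, with in-process dictionaries standing in for a real session store and customer profile database: short-term turns are capped and discarded when a session ends, while durable facts (such as an open mortgage question) carry over into the next session.

```python
from collections import deque


class ContextualMemory:
    """Short-term conversation buffer plus long-term per-customer facts (in-memory sketch)."""

    def __init__(self, max_turns: int = 6):
        self.max_turns = max_turns
        self.short_term: dict[str, deque] = {}          # customer_id -> recent (role, text) turns
        self.long_term: dict[str, dict[str, str]] = {}  # customer_id -> durable facts

    def add_turn(self, customer_id: str, role: str, text: str) -> None:
        # deque(maxlen=...) drops the oldest turn once the cap is reached.
        buf = self.short_term.setdefault(customer_id, deque(maxlen=self.max_turns))
        buf.append((role, text))

    def remember(self, customer_id: str, key: str, value: str) -> None:
        self.long_term.setdefault(customer_id, {})[key] = value

    def end_session(self, customer_id: str) -> None:
        # Conversation turns are discarded; long-term facts survive into the next session.
        self.short_term.pop(customer_id, None)

    def build_context(self, customer_id: str) -> str:
        """Render what the assistant should 'know' before answering the next message."""
        facts = self.long_term.get(customer_id, {})
        lines = [f"Known: {k} = {v}" for k, v in sorted(facts.items())]
        lines += [f"{role}: {text}" for role, text in self.short_term.get(customer_id, ())]
        return "\n".join(lines)


if __name__ == "__main__":
    mem = ContextualMemory()
    mem.add_turn("c42", "customer", "What rate could I get on a 30-year mortgage?")
    mem.remember("c42", "open_topic", "30-year mortgage rate inquiry")
    mem.end_session("c42")
    # In a later session, the open topic is already in context.
    print(mem.build_context("c42"))  # Known: open_topic = 30-year mortgage rate inquiry
```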

Key Technologies for Building Context-Aware AI

Transformer-Based Language Models

At the core of context-aware AI are transformer-based language models, the architecture behind GPT, BERT, and domain-specific models like FinBERT. Transformers excel at analyzing relationships within text using self-attention, which allows them to consider all parts of an input when generating responses. This gives them strong natural language understanding and the ability to produce coherent, human-like output.

In enterprises, transformers act as the “reasoning engine.” Out-of-the-box, however, they face two limits: (1) a fixed context window (they can only “remember” a certain amount of input at once) and (2) knowledge restricted to what was seen in training. To make them useful in banking, they are paired with external memory systems like vector databases and knowledge graphs. These feed relevant facts into the model so it can ground responses in up-to-date and institution-specific knowledge.
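
Because the context window is fixed, something has to decide which retrieved facts make it into the prompt. One simple policy, sketched below under stated assumptions, is to rank facts by the retriever's relevance score and greedily pack them until a token budget runs out. The `approx_tokens` helper is a hypothetical stand-in that counts words; real models use subword tokenizers, so actual counts will differ. The fee facts are made-up sample data.

```python
def approx_tokens(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack_context(facts: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily keep the highest-scoring facts that fit within the token budget.

    facts:  (relevance_score, text) pairs supplied by retrieval.
    budget: tokens left for context after the instructions and the user's question.
    """
    chosen: list[str] = []
    used = 0
    for _, text in sorted(facts, key=lambda f: f[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:  # skip facts that would overflow; smaller ones may still fit
            chosen.append(text)
            used += cost
    return chosen


if __name__ == "__main__":
    facts = [
        (0.9, "Outgoing domestic wires cost 25 USD"),
        (0.2, "Branch hours are 9 to 5 on weekdays"),
        (0.7, "Wire fees are waived for premium accounts"),
    ]
    print(pack_context(facts, budget=13))
    # ['Outgoing domestic wires cost 25 USD', 'Wire fees are waived for premium accounts']
```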

Recent advances are pushing boundaries: new LLMs support context windows of 100k+ tokens, enabling them to process hundreds of pages in one go. Combined with multimodal inputs, these models can analyze contracts, financial statements, and even charts in a single session. In practice, transformers serve as the core intelligence, while surrounding architectures supply the context needed to perform reliably in enterprise environments.

Retrieval-Augmented Generation (RAG)

RAG is an approach that combines retrieval and generation to make AI more contextually accurate. Instead of relying only on what an LLM “remembers” from training, RAG systems actively fetch relevant data when a question is asked. The process is straightforward: documents are first broken into chunks and stored as embeddings in a vector database. When a query comes in, the system retrieves the most relevant chunks, inserts them into the model’s prompt, and the model generates a response that is explicitly grounded in those documents.
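
The chunk, store, and retrieve steps can be sketched end to end with only the Python standard library. This is a toy, not a production design: the hypothetical `embed` function uses word counts instead of a learned embedding model, and `TinyVectorIndex` is a list scanned in full rather than a vector database with approximate nearest-neighbour search. The chunks that `search` returns are what would be inserted into the model's prompt.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split a document into overlapping windows of `size` words (requires size > overlap)."""
    words = text.split()
    step = size - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + size]) for i in starts]


def embed(text: str) -> Counter:
    # Toy stand-in for a learned embedding model: a bag of lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    """In-memory list of (chunk, vector) pairs, scanned in full on every query."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        # Chunk the document, then store each chunk alongside its vector.
        for piece in chunk(text):
            self.entries.append((piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list[str]:
        # Rank stored chunks by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [piece for piece, _ in ranked[:k]]


if __name__ == "__main__":
    index = TinyVectorIndex()
    index.add_document("Outgoing domestic wire transfers cost 25 dollars per transfer.")
    index.add_document("Savings accounts earn interest that is paid monthly.")
    print(index.search("How much does a wire transfer cost?", k=1))
    # ['Outgoing domestic wire transfers cost 25 dollars per transfer.']
```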


This pattern is transformative for enterprises. It reduces hallucinations, keeps outputs aligned with official policies, and enables AI to answer questions about very recent or proprietary data without retraining. For example, a customer might ask about wire transfer fees. A RAG-powered assistant retrieves the latest fee schedule from the bank’s knowledge base, injects it into the prompt, and delivers an accurate, up-to-date response — possibly even citing the source.
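
The step where retrieved passages are placed into the prompt might look like the sketch below. The instruction wording and the `build_grounded_prompt` helper are assumptions, and the source IDs and fee text are made-up sample data; numbering the sources gives the model something concrete to cite.

```python
def build_grounded_prompt(question: str, sources: list[tuple[str, str]]) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved passages.

    sources: (source_id, passage) pairs returned by the retriever, best match first.
    """
    numbered = [f"[{i}] ({source_id}) {passage}"
                for i, (source_id, passage) in enumerate(sources, start=1)]
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If the sources do not contain the answer, say so.\n\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    retrieved = [("fee-schedule", "Outgoing domestic wires cost 25 USD; international wires cost 45 USD.")]
    print(build_grounded_prompt("What does an international wire cost?", retrieved))
```

The refusal instruction matters as much as the sources: it gives the model a sanctioned way out when retrieval comes back empty, instead of inviting a guess.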

The business advantage is clear: when a policy or data set changes, updating the index gives the AI access to the new knowledge as soon as it is re-indexed, with no model retraining. RAG effectively turns LLMs into domain-aware, reliable query engines over enterprise knowledge.
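
Keeping the index current is mostly bookkeeping: when a document changes, its old chunks are replaced rather than appended to, so retrieval never surfaces the stale version. The sketch below assumes a hypothetical `UpdatableIndex` keyed by document ID. It splits on blank lines and uses substring matching in place of embeddings; a real vector database would also re-embed the new chunks on upsert.

```python
class UpdatableIndex:
    """Retrieval index keyed by document ID, so a new version replaces the old one."""

    def __init__(self) -> None:
        self.chunks: dict[str, list[str]] = {}  # doc_id -> chunks of the current version
        self.versions: dict[str, int] = {}      # doc_id -> number of times indexed

    def upsert(self, doc_id: str, text: str) -> int:
        # Replace (not append) this document's chunks, so stale text can't be retrieved.
        self.chunks[doc_id] = [p.strip() for p in text.split("\n\n") if p.strip()]
        self.versions[doc_id] = self.versions.get(doc_id, 0) + 1
        return self.versions[doc_id]

    def search(self, term: str) -> list[tuple[str, str]]:
        # Substring match stands in for embedding similarity in this sketch.
        term = term.lower()
        return [(doc_id, c) for doc_id, cs in self.chunks.items() for c in cs if term in c.lower()]


if __name__ == "__main__":
    index = UpdatableIndex()
    index.upsert("wire-fees", "Domestic wire: 25 USD.\n\nInternational wire: 45 USD.")
    index.upsert("wire-fees", "Domestic wire: 20 USD.\n\nInternational wire: 45 USD.")  # fee change
    print(index.search("domestic"))  # [('wire-fees', 'Domestic wire: 20 USD.')]
```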

Hybrid and Knowledge-Enhanced Approaches

While transformers and RAG are powerful, some enterprise scenarios demand deeper reasoning and transparency. This is where hybrid approaches come in, blending machine learning with symbolic methods like rules or knowledge graphs. A leading example is graph-augmented RAG (GraphRAG), which retrieves not only text but also a subgraph of connected entities relevant to the query. This allows AI to perform multi-hop reasoning, such as tracing money flows through linked accounts, that is difficult to achieve with text retrieval alone.
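
The subgraph-retrieval step can be sketched as a breadth-first search out to k hops from the entity named in the query, with the result serialized as text triples for the LLM. This is an illustrative sketch, not the implementation of any particular GraphRAG library; the edge format and the account and company identifiers are hypothetical.

```python
from collections import deque

Edge = tuple[str, str, str]  # (source_entity, relation, target_entity)


def k_hop_subgraph(edges: list[Edge], seed: str, k: int) -> list[Edge]:
    """Return every edge reachable within k hops of the seed, ignoring edge direction."""
    incident: dict[str, list[Edge]] = {}
    for edge in edges:
        incident.setdefault(edge[0], []).append(edge)
        incident.setdefault(edge[2], []).append(edge)

    seen_nodes, seen_edges, found = {seed}, set(), []
    frontier = deque([(seed, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:  # do not expand past the hop limit
            continue
        for edge in incident.get(node, []):
            if edge not in seen_edges:
                seen_edges.add(edge)
                found.append(edge)
            neighbour = edge[2] if edge[0] == node else edge[0]
            if neighbour not in seen_nodes:
                seen_nodes.add(neighbour)
                frontier.append((neighbour, depth + 1))
    return found


def as_context(subgraph: list[Edge]) -> str:
    """Serialize the subgraph as one text triple per line for the LLM prompt."""
    return "\n".join(f"{s} --{r}--> {t}" for s, r, t in subgraph)


if __name__ == "__main__":
    edges = [
        ("acct:A", "sent_to", "acct:B"),
        ("acct:B", "sent_to", "acct:C"),
        ("acct:C", "owned_by", "company:X"),
        ("acct:Q", "sent_to", "acct:R"),  # unrelated activity, never retrieved
    ]
    print(as_context(k_hop_subgraph(edges, "acct:A", k=3)))
```

With `k=3` the three-edge chain from `acct:A` to `company:X` is retrieved while the unrelated `acct:Q` transfer is not; with `k=2` the ownership edge falls outside the hop limit.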

For banks, this is crucial. Fraud detection often depends on seeing relationships between accounts, transactions, and entities. GraphRAG can surface those links, while the LLM explains them in natural language. Equally important, this structure creates explainability: the system can show the reasoning path (the graph connections) behind its conclusion. In compliance and risk management, such transparency is non-negotiable.
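
The reasoning path itself can come from a shortest-path search that records which edge reached each node, so the chain can be replayed as an explanation. This minimal sketch assumes directed edges in a hypothetical `(source, relation, target)` form and stands in for the path queries a graph database would normally provide.

```python
from collections import deque
from typing import Optional

Edge = tuple[str, str, str]  # (source, relation, target), followed in its stated direction


def explain_link(edges: list[Edge], start: str, target: str) -> Optional[list[Edge]]:
    """Return the shortest directed chain of edges from start to target, or None if unlinked."""
    outgoing: dict[str, list[Edge]] = {}
    for edge in edges:
        outgoing.setdefault(edge[0], []).append(edge)

    reached_by: dict[str, Optional[Edge]] = {start: None}  # node -> edge used to reach it
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            path: list[Edge] = []
            while reached_by[node] is not None:  # walk back to the start
                edge = reached_by[node]
                path.append(edge)
                node = edge[0]
            return path[::-1]
        for edge in outgoing.get(node, []):
            if edge[2] not in reached_by:
                reached_by[edge[2]] = edge
                queue.append(edge[2])
    return None


if __name__ == "__main__":
    edges = [
        ("acct:A", "sent_to", "acct:B"),
        ("acct:A", "sent_to", "acct:D"),
        ("acct:B", "sent_to", "acct:C"),
        ("acct:C", "owned_by", "company:X"),
    ]
    for s, r, t in explain_link(edges, "acct:A", "company:X") or []:
        print(f"{s} {r} {t}")
```

Each printed line is one auditable step (`acct:A sent_to acct:B`, and so on), which is the kind of traceable chain an LLM can then narrate for a compliance reviewer.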

Beyond graphs, neuro-symbolic hybrids use a combination of ML and rule-based reasoning. For example, an ML model might flag suspicious transactions, and a rule engine applies regulatory logic to confirm if they match known laundering patterns. This combination improves accuracy and ensures that outputs are interpretable — a must in regulated industries. Hybrid AI makes context-aware systems both powerful and trustworthy.
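
The ML-flags-then-rules pattern can be sketched as a two-stage pipeline. Here a hypothetical upstream model supplies `ml_score`, and a symbolic rule looks for structuring: several cash deposits, each under a reporting threshold, that together exceed it. The 10,000 threshold, seven-day window, and 0.8 cutoff are illustrative values, not regulatory guidance; the rule's output doubles as a human-readable reason.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Deposit:
    account: str
    amount: float
    day: date
    ml_score: float  # anomaly score in [0, 1] from a hypothetical upstream ML model


# Illustrative parameters only; real values come from the applicable regulations and policy.
REPORTING_THRESHOLD = 10_000.0
WINDOW = timedelta(days=7)
ML_CUTOFF = 0.8


def structuring_rule(history: list[Deposit], flagged: Deposit) -> Optional[str]:
    """Symbolic check: several sub-threshold deposits that together exceed the threshold."""
    recent = [d for d in history
              if d.account == flagged.account and flagged.day - WINDOW <= d.day <= flagged.day]
    below = [d for d in recent if d.amount < REPORTING_THRESHOLD]
    total = sum(d.amount for d in below)
    if len(below) >= 3 and total > REPORTING_THRESHOLD:
        return (f"{len(below)} deposits under {REPORTING_THRESHOLD:,.0f} within "
                f"{WINDOW.days} days totaling {total:,.0f}")
    return None


def review(history: list[Deposit]) -> list[tuple[Deposit, str]]:
    """Stage 1: the ML score flags candidates. Stage 2: the rule confirms and explains them."""
    confirmed = []
    for deposit in history:
        if deposit.ml_score >= ML_CUTOFF:
            reason = structuring_rule(history, deposit)
            if reason is not None:
                confirmed.append((deposit, reason))
    return confirmed


if __name__ == "__main__":
    history = [
        Deposit("A", 9_500.0, date(2024, 3, 1), 0.40),
        Deposit("A", 9_000.0, date(2024, 3, 3), 0.60),
        Deposit("A", 9_800.0, date(2024, 3, 5), 0.90),
        Deposit("B", 12_000.0, date(2024, 3, 5), 0.95),  # flagged by ML, but no rule matches
    ]
    for deposit, reason in review(history):
        print(deposit.account, reason)  # A 3 deposits under 10,000 within 7 days totaling 28,300
```

Account B shows why the second stage matters: the model's high score alone does not produce a confirmed alert, because no rule explains it.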

Conclusion

Context-aware AI is reshaping how financial institutions deploy intelligence, shifting from static, narrow tools to dynamic systems that can remember, reason, and adapt. By combining long-term memory layers like vector databases and knowledge graphs with powerful transformer-based models and orchestration methods such as RAG and hybrid pipelines, banks can build solutions that are more accurate, explainable, and aligned with real-world complexity. We already see this in fraud detection systems that piece together scattered clues, virtual assistants that recall customer histories for personalized service, compliance bots that audit in seconds, and risk models that fuse expert rules with live data. The result is AI that doesn’t just respond but understands — offering decisions that are grounded, contextual, and transparent.

Delivering these systems requires a blend of disciplines: data engineering to construct knowledge stores, machine learning to fine-tune models, software engineering for integration and security, and domain expertise to ensure regulatory and financial rigor. The payoff is significant: banks gain a corporate memory that enhances human expertise, breaks down silos, and provides traceable reasoning that builds trust with regulators and stakeholders. Looking ahead, advances in multimodal models, real-time knowledge graphs, and “context as a service” platforms will only accelerate this shift. In an industry where timing and precision are critical, context-aware AI is emerging as a true strategic asset — enabling safer operations, sharper insights, and deeper customer relationships.
