A troubling trend called agent washing is emerging: conventional software and automation are being falsely positioned as advanced autonomous agents. This deception is a problem in any sector, but it is especially damaging for financial institutions, including banks, insurance companies, NBFCs, and investment firms.

Financial institutions are already highly regulated, and any new technology in this sector must undergo strict oversight. If a misrepresented tool is responsible for credit underwriting or document fraud detection, the institution is exposed to compliance risk, and client trust could be undermined.

An Overview of Agent Washing

In our article, “How to Spot and Avoid Agent Washing in Enterprises,” we compared this phenomenon with “greenwashing,” the practice of marketing products as green or environmentally friendly when they are not.

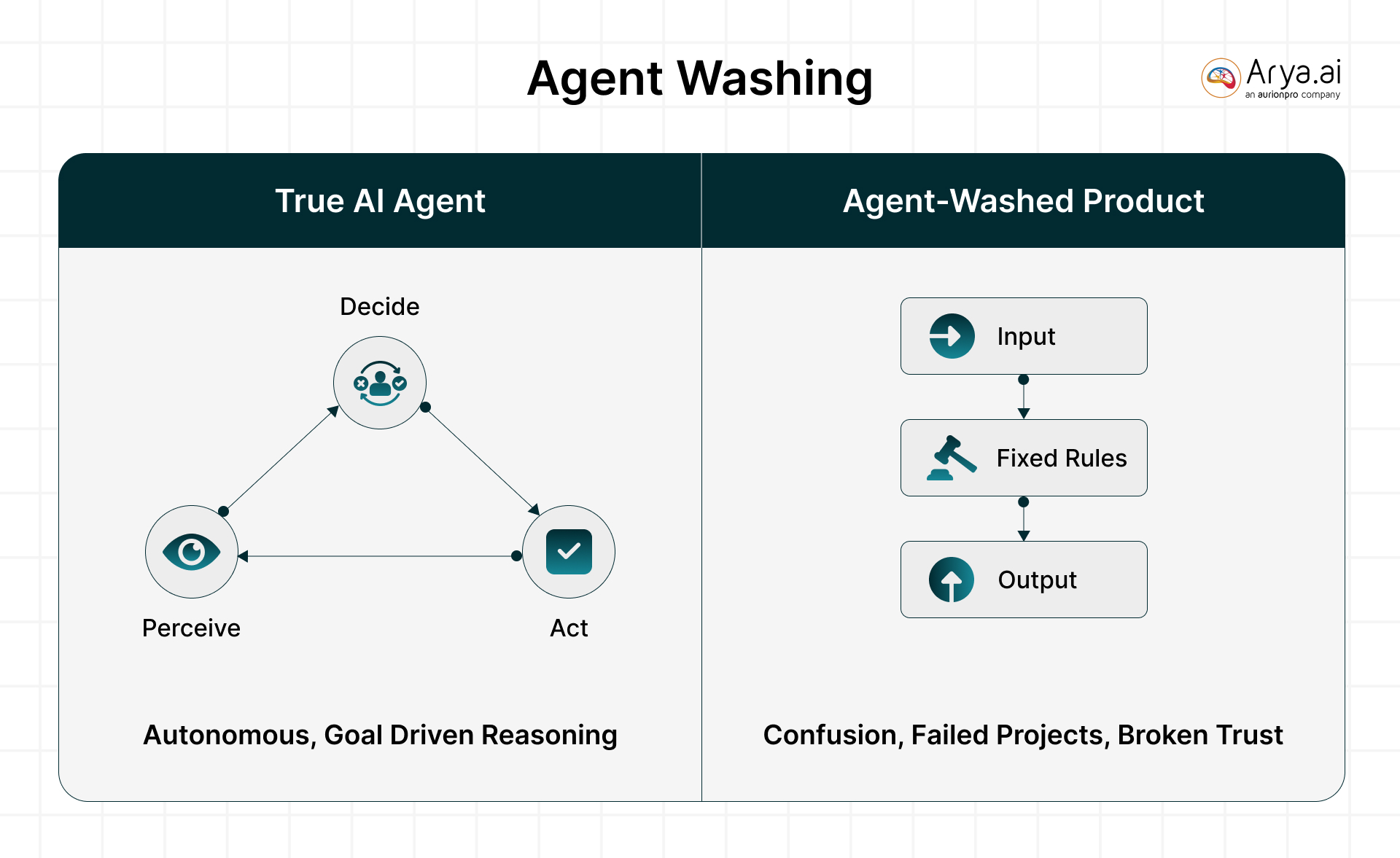

Similarly, agent washing is when companies overhype or misrepresent a product as having artificial intelligence or autonomous “agent” capabilities when it does not. Instead of true AI, these offerings are often just conventional software, simple algorithms, large language model (LLM) chatbots, or robotic process automation (RPA) repackaged with an “AI” label.

A genuine AI agent should be able to perceive, decide, and act toward goals with minimal human intervention. Agent-washed products lack these capabilities. They might automate a fixed workflow or respond to basic inputs, but they don’t exhibit true autonomous reasoning. Agent washing isn’t just harmless marketing fluff – it sets the stage for confusion, failed projects, and broken trust in AI solutions.

AI Adoption Risks in Financial Institutions

We must first highlight the risks of adopting AI into core workflows. This sets the context for why even a single case of agent washing can make things worse for financial institutions.

Banks and other financial firms operate under strict regulatory and risk management regimes, so any new technology (especially AI) is scrutinized for potential downsides. Key AI-related risk factors in finance include:

Regulatory Scrutiny & Compliance

Financial regulators keep a close watch on the use of AI in financial workflows, primarily because AI has been linked to concerns about data privacy, fairness, bias, consumer protection, and more. Regulators want to ensure that when banks use AI, they can justify and audit the decisions it has made.

Model Risk Management

When a bank relies on a model for credit scoring or asset pricing, its risk management framework must be solid enough to prevent financial losses or compliance breaches. AI and machine learning models add complexity to this task, as they may be non-transparent “black boxes” or continuously learning, making validation and oversight challenging.

Data Quality & Governance

Financial datasets can be massive but also messy, biased, or unrepresentative. Ensuring proper data governance (accurate, unbiased data collection, processing, and storage) is critical. Poor data can lead to model errors (e.g., falsely flagging innocent transactions as fraud or overlooking risky loans).

Ethical and Fairness Concerns

If an AI system behaves unfairly or unethically, a financial institution could face not just public backlash but also regulatory action under laws for consumer protection and anti-discrimination. Maintaining client trust is paramount in finance, and any AI-driven scandal (like an “AI robo-advisor” losing clients’ money due to a flaw, or an “AI loan model” redlining communities) can severely damage a firm’s reputation.

This means innovation is only viable with proper guardrails in place. If ordinary automation masked as an innovative solution becomes part of core workflows, these risks are compounded. In effect, it pours fuel on an existing fire.

How Agent Washing Exacerbates AI Risks in Finance

Agent washing introduces misleading claims and obscures the true nature of a tool, which undermines risk controls and leads banks astray in the following ways:

Misleading Claims and False Assurance

When a vendor or internal team overstates the intelligence or autonomy of a system, it can create a false sense of security. Financial institutions may believe they have a cutting-edge AI handling a task and thus lower their guard. In reality, if the tool is just a simplistic automation, it may fail to handle edge cases or novel risks.

For example, an “AI-powered” fraud detection system that is actually a static rules engine could miss new fraud patterns, while giving managers unwarranted confidence. This gap between promised and actual capabilities means critical issues (fraud, errors, compliance violations) can go undetected until damage is done.
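The gap described above can be made concrete with a small sketch. It assumes a hypothetical fixed-threshold rule and invented transaction data; the threshold, field names, and account ID are illustrative only and do not come from any real product.

```python
# Hypothetical static rule: flag any single transaction above a fixed limit.
AMOUNT_LIMIT = 10_000  # assumed threshold, for illustration only


def static_rule_flags(txn: dict) -> bool:
    """Return True if the fixed-threshold rule would flag this transaction."""
    return txn["amount"] > AMOUNT_LIMIT


# A pattern the rule was never written for: one account splitting a large
# sum into several transfers that each stay under the limit.
transactions = [{"account": "A-001", "amount": 9_500} for _ in range(5)]

flags = [static_rule_flags(t) for t in transactions]
total_moved = sum(t["amount"] for t in transactions)

print(any(flags))   # False: no single transfer exceeds the limit
print(total_moved)  # 47500
```

Nothing here is wrong with the rule itself. The problem arises only when a rule like this is sold and trusted as an adaptive system that would notice the pattern on its own.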

Reduced Transparency in Decision-Making

Agent washing often goes hand-in-hand with a lack of transparency. If a product’s inner workings are obscured by buzzwords, banks can have trouble understanding how decisions are actually made. In some cases, vendors may refuse to fully disclose their algorithms under the guise of proprietary “AI” technology.

This opacity makes it difficult for the bank’s risk managers or auditors to verify the system’s logic. Moreover, if a system is falsely labeled as AI, bank staff might assume its operations are too complex to question, further reducing scrutiny. This is perilous in finance, where auditability is crucial. Regulators expect clear explanations for why, say, a transaction was flagged as suspicious or a customer was denied credit.

Compliance Violations and Model Governance Gaps

By misleading management about what a tool actually is, agent washing can cause institutions to mishandle compliance and model risk management. If a bank believes a vendor’s product is a proven AI system, it might deploy it without the rigorous validation normally required for new models.

An agent-washed solution might slip through these controls if it’s not recognized as a “model” or if its capabilities are taken at face value. The danger is that the tool could be flawed or used inappropriately, leading to errors that breach regulations.

Reputational and Trust Damage

Trust is a bank’s greatest asset – trust from customers, and trust from regulators that the institution is safe and sound. Agent washing can severely erode both. Internally, if an AI tool fails to live up to promises, it can embarrass leadership and delay genuine innovation.

Externally, if clients discover that a bank’s “AI-driven robo-advisor” or “intelligent chatbot” was mostly hype, they may lose confidence in the institution’s tech savvy and integrity. Worse, regulators who feel misled will doubt management’s credibility.

Misallocation of Resources and Opportunity Cost

Agent washing can also inflict a more immediate financial wound: wasted investments. Financial institutions might pour significant budget into acquiring or implementing a system sold as “next-gen AI,” only to get mediocre performance equivalent to much cheaper legacy software.

These sunk costs can be substantial, including licensing fees, integration expenses, and training time, with little to show for it. Furthermore, if the tool underperforms, the institution must spend additional resources on remediation: fixing errors, handling backlogs (e.g., if a compliance tool falsely flagged thousands of transactions), or even paying regulatory penalties if issues weren’t caught.

How Financial Institutions Can Guard Against Agent Washing

Given the higher stakes, banks and financial firms should adopt a proactive stance to identify and avoid agent washing. Here are several recommendations to protect against this risk:

Build AI Literacy

A strong first line of defense is education. Firms should invest in AI literacy for executives, managers, and frontline teams so they understand what AI can and cannot do. This includes learning basic AI concepts, capabilities, and limitations. With greater knowledge, staff are less likely to be dazzled by buzzwords or unrealistic claims.

Demand Transparency and Proof from Vendors

Financial institutions should insist on full transparency when evaluating AI products, especially from third-party vendors. Ask the vendor to explain how the system works, what techniques it uses, what data it needs, and what its limitations are. Reputable providers should be able to articulate their methodology and provide evidence of their tool’s performance.

Focus on Measurable Outcomes

When assessing AI tools, keep the focus on tangible performance metrics. Define what business outcome or risk metric you need improved (e.g., higher fraud detection rate, lower false positives, faster loan processing) and ask whether the tool demonstrably delivers on that.
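As a minimal sketch of what "measurable" can mean in practice, the snippet below computes a fraud detection rate and a false positive rate from labeled outcomes. The `Outcome` record and `detection_metrics` function are hypothetical names chosen for illustration, and the sketch assumes ground-truth labels are available from later investigation.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    flagged: bool   # did the tool flag the transaction?
    is_fraud: bool  # ground truth established by later investigation


def detection_metrics(outcomes: list[Outcome]) -> dict[str, float]:
    """Compute detection rate (recall) and false positive rate."""
    tp = sum(1 for o in outcomes if o.flagged and o.is_fraud)
    fp = sum(1 for o in outcomes if o.flagged and not o.is_fraud)
    fn = sum(1 for o in outcomes if not o.flagged and o.is_fraud)
    tn = sum(1 for o in outcomes if not o.flagged and not o.is_fraud)
    return {
        # Share of actual fraud cases the tool caught.
        "detection_rate": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of legitimate transactions the tool wrongly flagged.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


# Illustrative data: 2 fraud cases (1 caught), 8 legitimate (2 wrongly flagged).
sample = (
    [Outcome(True, True), Outcome(False, True)]
    + [Outcome(True, False)] * 2
    + [Outcome(False, False)] * 6
)
metrics = detection_metrics(sample)
print(metrics)
```

Running the same calculation on the incumbent process and on the candidate tool, over the same historical data, gives a like-for-like comparison that marketing language cannot obscure.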

Strengthen Internal Validation and Auditing

Treat any new AI or “intelligent” tool as you would a new critical model, with rigorous validation and testing. This means subjecting the system to independent review by your model risk or validation team. Before fully deploying the tool in production, conduct sandbox testing, stress tests, and bias checks. Verify that it meets necessary regulatory requirements and your own risk criteria.

Continuous Monitoring and Evolution

Even after deployment, remain vigilant. Monitor the performance of AI tools over time to ensure they continue to deliver and behave as expected. Set up key risk indicators or metrics (e.g., error rates, override rates, drift in outcomes) that might signal the tool is underperforming or being misused. Maintain an open dialogue with solution providers for updates.
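The indicators mentioned above can be tracked with very little machinery. The sketch below is one possible shape, assuming each decision is logged as a record with `flagged` and `overridden` fields; the field names, function names, and alert thresholds are all assumptions for illustration, and drift is approximated here simply as a shift in the flag rate between two periods.

```python
def rate(events: list[dict], key: str) -> float:
    """Fraction of events where the given boolean field is True."""
    if not events:
        return 0.0
    return sum(1 for e in events if e[key]) / len(events)


def risk_indicators(
    baseline: list[dict],
    current: list[dict],
    max_override_rate: float = 0.10,    # assumed tolerance
    max_flag_rate_shift: float = 0.05,  # assumed tolerance
) -> dict:
    """Compare the current period against a baseline and list breached limits."""
    override_rate = rate(current, "overridden")
    flag_rate_shift = abs(rate(current, "flagged") - rate(baseline, "flagged"))

    alerts = []
    if override_rate > max_override_rate:
        alerts.append("override_rate")
    if flag_rate_shift > max_flag_rate_shift:
        alerts.append("flag_rate_drift")

    return {
        "override_rate": override_rate,
        "flag_rate_shift": flag_rate_shift,
        "alerts": alerts,
    }


# Illustrative logs: staff override far more decisions in the current period.
baseline = [{"flagged": i < 2, "overridden": False} for i in range(10)]
current = [{"flagged": i < 5, "overridden": i < 3} for i in range(10)]
report = risk_indicators(baseline, current)
print(report["alerts"])
```

A rising override rate is a particularly useful signal: it means staff are routinely disagreeing with the tool, which is worth investigating regardless of what the tool is called.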

Foster a Culture of Pragmatism

Finally, create a culture where honesty about technology capabilities is valued. Not every use case requires a cutting-edge solution. Sometimes a simple OCR tool does the trick. The real value of any solution lies in the tangible outcomes it drives. A PII masking model does not have to be fancy; it should simply mask the digits.
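To illustrate how unglamorous an effective solution can be, here is a minimal sketch of digit masking in plain Python. The function name `mask_digits` and the convention of keeping the last four digits visible are assumptions made for this example, not a description of any particular product.

```python
def mask_digits(text: str, keep_last: int = 4, mask_char: str = "X") -> str:
    """Mask every digit in the text except the final `keep_last` digits."""
    total_digits = sum(ch.isdigit() for ch in text)
    to_mask = max(total_digits - keep_last, 0)

    masked = 0
    out = []
    for ch in text:
        if ch.isdigit() and masked < to_mask:
            out.append(mask_char)  # replace the digit, keep separators intact
            masked += 1
        else:
            out.append(ch)
    return "".join(out)


print(mask_digits("4111-1111-1111-1234"))  # XXXX-XXXX-XXXX-1234
```

A few lines of deterministic logic like this are easy to test and audit, and labeling them honestly costs nothing.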

Conclusion

Financial institutions operate on trust, and it is the very thing that agent washing jeopardizes. While the allure of AI is strong and real intelligent systems can indeed revolutionize finance, falsely labeling tools as “AI agents” is a dangerous shortcut. It exacerbates existing AI risks by blurring oversight, inviting regulatory trouble, and undermining the very improvements that genuine AI can bring.

Banks and insurers must therefore approach AI with both enthusiasm and caution: perform due diligence, insist on truth over hype, and integrate new technologies into robust risk management frameworks. By doing so, they can reap the benefits of AI innovation while avoiding the costly trap of agent washing – ensuring that when they do deploy “intelligent” systems, those systems are the real deal, with rewards that outweigh the risks.
